Data whirring around is always good. It means there is progress, things are happening, and there is more to be done. Of course, if you have a huge database it doesn’t make much sense to identify the progress by combing through thousands of rows and who knows how many columns to find values that have been added or changed. Instead, wouldn’t it be nice if there were a simple job to spot the changes, large or small, and present them in a simple list? This post will walk you through the construction of a simple Update Notification job, whose sole purpose is to summarize changes in a table.

Setting up the Job

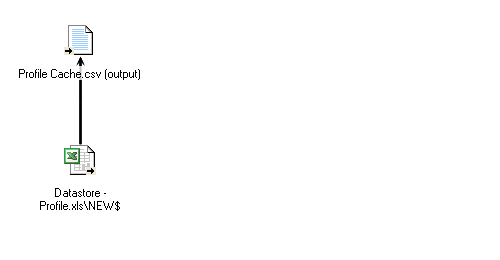

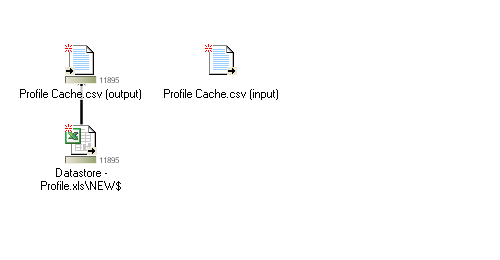

Begin by selecting your input node, a table whose regularly shifting contents you’d like to follow. Perhaps it is where the results of the day’s oxygen-absorption experiments are kept, or maybe it is a running list of email inquiries. Next create an output node, which we’ll call the Cache. This lil’ file is the key to the update system, as it will keep a record of what the input looked like the last time the job was run.

Cute! Run the job to create the output file, then add the new Cache file as a data connection. With your connection defined, drag the Cache input into your job. “But wait, now there are TWO instances of this file!” Yes, it is a little scary, but if you make their names distinct by adding something as simple as “input” and “output,” respectively, everything should be fine.

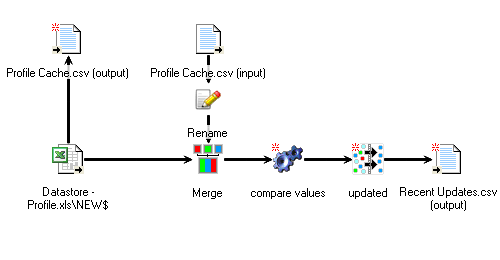

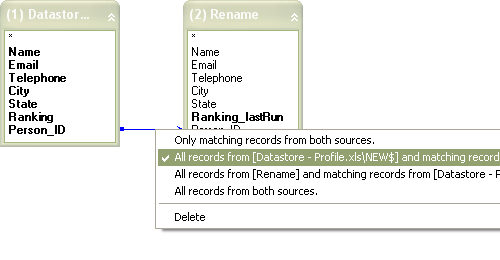

What we need to do now is compare the old values stored in the Cache with the new values from your primary input table, in this case called “Profile.” Time to add some machinery to this job. In order to make the comparison, we first have to bring the old and new values into the same thread, which we’ll do by creating a Merge node and connecting both inputs. When we join the two sources, the Merge node will add extensions to duplicate column headings in order to keep them distinct (“FolderComplete” and “FolderComplete#2″, for example). This is exactly what we want, but why not make life easier? Place a rename node between the Cache input and the Merge, and for every column you want to compare, add an intuitive extension, such as “…_lastRun”, ie “FolderComplete_lastRun,” “Applied_lastRun,” “Hemoglobin_lastRun,” etc. Veera makes it easy to make life easier.

What we need to do now is compare the old values stored in the Cache with the new values from your primary input table, in this case called “Profile.” Time to add some machinery to this job. In order to make the comparison, we first have to bring the old and new values into the same thread, which we’ll do by creating a Merge node and connecting both inputs. When we join the two sources, the Merge node will add extensions to duplicate column headings in order to keep them distinct (“FolderComplete” and “FolderComplete#2″, for example). This is exactly what we want, but why not make life easier? Place a rename node between the Cache input and the Merge, and for every column you want to compare, add an intuitive extension, such as “…_lastRun”, ie “FolderComplete_lastRun,” “Applied_lastRun,” “Hemoglobin_lastRun,” etc. Veera makes it easy to make life easier.

Open up the Merge node and join the two sources at the identifier key, the value used to uniquely identify each record. In the case of this example it is called “Person_ID.” Right click on the join and select “All records from [primary source] and matching records from [Cache].” This is on the assumption that rows are always being added, but not removed. Select all columns from the primary source and all the renamed columns from the Cache you’d like to compare – it isn’t necessary to bring them all over.

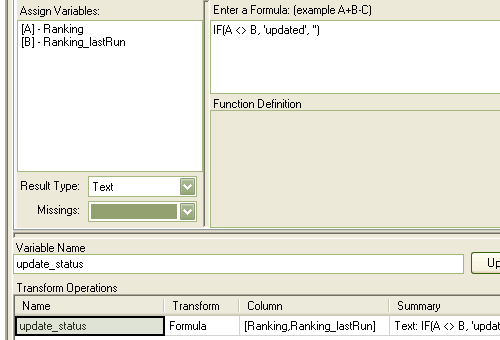

Magnificent. Save and close the node. Now we are ready for the compare itself. Create a Transform node following the Merge. Open up the node and select the “Multi-Variable Formula” tab. From the list at the left, select the values you want to compare, in this case “Ranking” and “Ranking_lastRun.” Give your new variable a name and using the IF function, flag the records where the old and new values are different (“<>” is the symbol for “does not equal”). In this example, the function leaves the new variable empty if the values are the same, and outputs the text string ‘updated’ when they are different.

Magnificent. Save and close the node. Now we are ready for the compare itself. Create a Transform node following the Merge. Open up the node and select the “Multi-Variable Formula” tab. From the list at the left, select the values you want to compare, in this case “Ranking” and “Ranking_lastRun.” Give your new variable a name and using the IF function, flag the records where the old and new values are different (“<>” is the symbol for “does not equal”). In this example, the function leaves the new variable empty if the values are the same, and outputs the text string ‘updated’ when they are different.

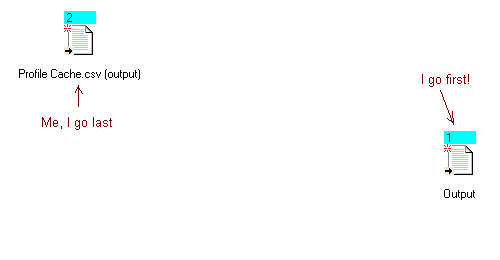

Click the Update button to save your newly created variable, and you’ll be golden. Use a Filter node to grab all the entries where the new variable called ‘update_status’ equals ‘updated’, and pipe all those fresh records into an output node. The system is almost finished, there is only one more thing to do. Click the Run Order button ( ) near the top of the layout window. When you do that, Veera will only display your output nodes, along with a number representing the order in which each node will run. For our job, which has two output nodes, we have to be very particular with our order. Because we don’t want to replace the data in the Cache of old values until AFTER we have compared those old values to the new ones, we want the Cache to receive the new data only after the comparison has happened. How do we do that? Well, we know the output containing our list of updates only outputs after the Transform compare has taken place, so if we have that output run before the Cache output, we will be safe. Click the output nodes in the order in which you want them to run (so click the Update output, then the Cache output). Done!

) near the top of the layout window. When you do that, Veera will only display your output nodes, along with a number representing the order in which each node will run. For our job, which has two output nodes, we have to be very particular with our order. Because we don’t want to replace the data in the Cache of old values until AFTER we have compared those old values to the new ones, we want the Cache to receive the new data only after the comparison has happened. How do we do that? Well, we know the output containing our list of updates only outputs after the Transform compare has taken place, so if we have that output run before the Cache output, we will be safe. Click the output nodes in the order in which you want them to run (so click the Update output, then the Cache output). Done!

) near the top of the layout window. When you do that, Veera will only display your output nodes, along with a number representing the order in which each node will run. For our job, which has two output nodes, we have to be very particular with our order. Because we don’t want to replace the data in the Cache of old values until AFTER we have compared those old values to the new ones, we want the Cache to receive the new data only after the comparison has happened. How do we do that? Well, we know the output containing our list of updates only outputs after the Transform compare has taken place, so if we have that output run before the Cache output, we will be safe. Click the output nodes in the order in which you want them to run (so click the Update output, then the Cache output). Done!

) near the top of the layout window. When you do that, Veera will only display your output nodes, along with a number representing the order in which each node will run. For our job, which has two output nodes, we have to be very particular with our order. Because we don’t want to replace the data in the Cache of old values until AFTER we have compared those old values to the new ones, we want the Cache to receive the new data only after the comparison has happened. How do we do that? Well, we know the output containing our list of updates only outputs after the Transform compare has taken place, so if we have that output run before the Cache output, we will be safe. Click the output nodes in the order in which you want them to run (so click the Update output, then the Cache output). Done!

Click the Run Order button again to return to the regular display, and save your job. Now you have a totally functional Simple Update notifier. Very cool.

Other Tricks

There are a few other useful things you can do with this setup, too. If you wanted to know which rows have been added since the last time the job was run, assuming that the column you’re evaluating is populated for every row, you could check for rows that don’t have a value merged in from the Cache. By changing the join in the Merge node to “all records from both sources,” you could figure out which rows had been removed as well, by scanning for null values in the new data. How handy!

By isolating changes with a setup like this you can do all kinds of cool things beyond merely staying aware of the changes. For example, if you need to manually keep two data sources synchronized you could simplify the task a great deal by limiting the rows you are moving to only those which are new or have changed. I save myself a good hour of time each week by doing just that! Peppered throughout my jobs there are many variations on this theme, useful in a great variety of ways. As a great example, every week in my admissions office I provide a complete list of every candidate within each individual counselor’s territory. Some of these lists are quite lengthy, so I use a somewhat sophisticated Update Notifier to point out important changes that have taken place in a candidate’s application status and communication stream, allowing the counselor to focus their efforts in very deliberate, helpful ways. Beyond making my work far more productive, Veera has done the same for a great number of folks who may never even use the software directly.

-Ryan Moran 12/19/10